Epoch 1.A

1994-1995

1994 - University years, computer science applications in finance.

My first projects consisted of implementing MCMC (Markov chain Monte Carlo) methods and Bayesian statistics for the probabilistic interpretation and optimization of financial risk models.

The quality of the random numbers used in these simulation methods is fundamental. Numbers from a CPU's pseudo-random generator are generally good enough, but not for randomness purists.

I experimented with these simulation methods in text-generating creative coding.

The following text is made of words randomly sampled from an English dictionary by a Markov chain process, mixed with randomly generated 3-letter words (inspired by assembly language mnemonics). These act as triggers, so that the reader's mind replaces them with its own words.

This way the final result is a co-creation between randomness and the reader.

[ MCMC song ]
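A minimal Python sketch of this kind of generator follows. It assumes a word-level Markov chain, where each word's possible successors are the words that follow it in a source corpus. It also assumes a fixed probability of emitting a random 3-letter "trigger" in place of the next word. The names `build_chain`, `random_trigger` and `generate`, the uppercase triggers and the 0.2 rate are illustrative choices, not the original 1994 code.

```python
import random
import string
from collections import defaultdict


def build_chain(words):
    """Map each word to the list of words that follow it in the corpus."""
    chain = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        chain[current].append(nxt)
    return chain


def random_trigger(rng):
    """A random 3-letter uppercase token, in the spirit of assembly mnemonics."""
    return "".join(rng.choice(string.ascii_uppercase) for _ in range(3))


def generate(words, length, trigger_rate=0.2, seed=None):
    """Walk the word chain, sometimes emitting a random trigger instead."""
    rng = random.Random(seed)
    chain = build_chain(words)
    word = rng.choice(words)
    out = []
    for _ in range(length):
        if rng.random() < trigger_rate:
            out.append(random_trigger(rng))  # the chain state does not advance
        else:
            out.append(word)
            followers = chain.get(word)
            # Dead end (word never followed by anything): restart at random.
            word = rng.choice(followers) if followers else rng.choice(words)
    return " ".join(out)
```

For example, `generate("the cloud drifts over the sea and the sea remembers the cloud".split(), 12, seed=1995)` returns twelve tokens that mix corpus words with random triggers. A dictionary-sized corpus gives much more varied output.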

1995 - I developed a basic 2-layer pre-CNN (a predictor model based on the principles of convolutional neural networks, although the term was not yet in common use). I used it for experiments with technical analysis methods in financial forecasting and with simulations for automated trading, which later became the theme of my Master's thesis.

That's how the Roamer software model was created and trained to optimize the hyperparameters of a technical analysis system.

[ A sample of the Roamer code written in Borland Delphi Pascal ]

This model combined MACD (moving average convergence divergence) and SCMA (stochastic crossover moving average) indicators, and in one of its versions also Bollinger bands.

Simply put, it was an optimization task: finding a global minimum in a multidimensional space by "visual" recognition.
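For reference, the standard textbook definitions of MACD and Bollinger bands can be sketched in pure Python as below; the function names are hypothetical. The defaults (12/26/9 for MACD, a 20-period window with k = 2 for Bollinger bands) are the usual conventions, not the Roamer's settings. They are exactly the kind of hyperparameters an optimizer would search over. SCMA is omitted because its exact formulation is not given here.

```python
from statistics import fmean, pstdev


def ema(values, span):
    """Exponential moving average with smoothing factor alpha = 2 / (span + 1)."""
    alpha = 2.0 / (span + 1)
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def macd(prices, fast=12, slow=26, signal=9):
    """Return the MACD line, its signal line and the histogram."""
    line = [f - s for f, s in zip(ema(prices, fast), ema(prices, slow))]
    sig = ema(line, signal)
    hist = [m - s for m, s in zip(line, sig)]
    return line, sig, hist


def bollinger(prices, window=20, k=2.0):
    """(lower, middle, upper) per full window: middle = simple moving average,
    bands at +/- k population standard deviations."""
    bands = []
    for i in range(window - 1, len(prices)):
        w = prices[i - window + 1 : i + 1]
        mid, sd = fmean(w), pstdev(w)
        bands.append((mid - k * sd, mid, mid + k * sd))
    return bands
```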

It was named Roamer because of its sliding moving average window.

It was also named so because, while training this model, I used a technique similar to reversible-jump MCMC (a variant of the Metropolis-Hastings algorithm that allows moves between parameter spaces of different dimensionality). I used it to optimize the MACD hyperparameters and to distribute the network's synaptic weights during backpropagation. Like a Markov chain, this technique employs stochastic "walkers" that move around randomly, distributing probabilities or randomly sampling a multidimensional dataset.
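The "walker" idea can be illustrated with a plain random-walk Metropolis sampler. This is a minimal sketch and deliberately simpler than reversible-jump MCMC: here the number of parameters stays fixed, whereas a reversible-jump sampler would also propose moves that add or remove dimensions. `metropolis` and its `step` parameter are hypothetical names.

```python
import math
import random


def metropolis(log_density, x0, n_steps, step=0.5, seed=None):
    """Random-walk Metropolis sampler.

    The Gaussian proposal is symmetric, so the Hastings correction cancels
    and a move is accepted with probability min(1, p(x') / p(x)).
    """
    rng = random.Random(seed)
    x = list(x0)
    logp = log_density(x)
    samples, accepted = [], 0
    for _ in range(n_steps):
        # Propose a small random step in every dimension.
        proposal = [xi + rng.gauss(0.0, step) for xi in x]
        logp_new = log_density(proposal)
        # min(0, ...) keeps the exponent <= 0, so the threshold stays in [0, 1].
        if rng.random() < math.exp(min(0.0, logp_new - logp)):
            x, logp = proposal, logp_new
            accepted += 1
        samples.append(list(x))
    return samples, accepted / n_steps
```

As an example, take `metropolis(lambda v: -0.5 * sum(t * t for t in v), [3.0, -3.0], 20000, seed=1)`, which samples a 2D standard normal. The walker drifts from its starting point toward the origin. After the first samples are discarded (burn-in), the sample mean and variance approach 0 and 1.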

To start gradient descent, one first needs to initialize the weights of the sigmoid nodes.

A virgin Machine Learning model should be like a blank canvas.

The theory says that the better and less biased the random numbers, the better the training results.

At first I used the CPU random generator, but later the same year I discovered the RAND Corporation numbers ("A Million Random Digits with 100,000 Normal Deviates", published in 1955), generated with an electronic roulette wheel and then rerandomized.

This book was a standard reference and, at the time, the largest and least biased dataset of its kind.

It still contained small but statistically significant biases. That's why I used a double rerandomization method: sampling this dataset in a random order (the CPU randomizer over the RAND set).

In some cases I especially liked to use the numbers from page 40, which contains the random sets 1995-1999, as a reference to the present time.

[ RAND numbers, page 40 ]
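Below is a minimal sketch of the double rerandomization idea. It assumes the digit table has been loaded into a string of decimal digits. The table in the test is a placeholder, not the actual RAND data, and the helper names are hypothetical. A pseudo-random generator picks the positions, and consecutive digits are then grouped into uniform numbers.

```python
import random


def resample_digits(table, n, seed=None):
    """Draw n digits from a fixed digit table at positions chosen by a
    pseudo-random generator (sampling with replacement)."""
    rng = random.Random(seed)
    return [int(table[rng.randrange(len(table))]) for _ in range(n)]


def digits_to_uniform(digits, width=5):
    """Group consecutive digits into width-digit integers scaled to [0, 1)."""
    return [
        int("".join(map(str, digits[i : i + width]))) / 10**width
        for i in range(0, len(digits) - width + 1, width)
    ]
```

One design caveat applies. Resampling positions mainly breaks up serial patterns in the table's order. It does not change the overall frequency of each digit in the table.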

I began to use the same data and the same software for some of my artworks.

If the Roamer is trained on random numbers, it ultimately produces nothing: there is no real global minimum on the learning surface, just a chaotic replication of the initial noise.

But if the training session is halted at a certain point, this undertrained model produces figurative shapes, a sort of cloud formation.

The initial set then had to be denoised, and the cloudy structures sharpened, by applying a mix of k-means and nearest-neighbour methods.

In a nutshell, it is a 2D dynamic heatmap visualization of the synaptic weights of an undertrained neural network trained on purely random data, with a lot of synaptic momentum.

Visualizing the reality by demolishing the perfect chaos with fumbling AI.

[ 2D Clouds - visualisation of the Roamer CNN hyperparameters training data ]

[ A Roamer CNN haiku. Partially co-generated with human-in-the-loop sampling to distinguish between nouns, adjectives and verbs ]

[ ASCII asterisk random snow, a reinterpretation of the previous 2D clouds ]

[ Snow Totem - ASCII asterisk random snow condensed into a pillar ]

[ Random Totem - a symmetric pillar where every block's ASCII symbol, length and height are purely random ]

Epoch 1.B

1996-1999

1996 - I began to use HotBits, a random number service from the Swiss FourmiLab (now discontinued). It was based on the timing of successive pairs of radioactive decays detected by a Geiger-Müller counter connected to a computer.

This is quantum-level spontaneous randomness: the process happens at the nondeterministic atomic level and is governed by the probabilistic nature of quantum mechanics.

From an extreme perspective, this process might be considered as situated midway between classical and quantum mechanics. Individual decay events are truly random, yet the statistical behaviour of a large number of radioactive atoms follows a regular and predictable pattern (the half-life of the radioactive substance).

It is a good source of randomness, but not suitable for industrial-scale computing, as its generation rate is limited (about 100 bytes per second).

[ HotBits internal setup. © FourmiLab ]
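The pair-timing principle can be simulated in a few lines. This sketch assumes the comparison scheme described for HotBits:

- four consecutive decays give two intervals, T1 and T2;
- the output bit is 0 if T1 < T2 and 1 if T1 > T2, and ties are discarded;
- the sense of the comparison is reversed on alternate bits to cancel any systematic bias.

The decay times here are simulated as the exponential gaps of a Poisson process, not real Geiger-Müller counts, and the function names are hypothetical.

```python
import random


def decay_times(rate, n, rng):
    """Simulated detection times: in a Poisson process the gaps between
    decays are exponentially distributed with the given mean rate."""
    t, times = 0.0, []
    for _ in range(n):
        t += rng.expovariate(rate)
        times.append(t)
    return times


def bits_from_decays(times, flip=True):
    """Consume four decays per bit: compare the interval of the first pair
    (T1) with that of the second pair (T2); ties are discarded."""
    bits, invert = [], False
    for i in range(0, len(times) - 3, 4):
        t1 = times[i + 1] - times[i]
        t2 = times[i + 3] - times[i + 2]
        if t1 == t2:
            continue
        bit = 1 if t1 > t2 else 0
        if flip:
            bit ^= int(invert)  # reverse the comparison sense on alternate bits
            invert = not invert
        bits.append(bit)
    return bits
```

Because four decays are consumed per output bit, the bit rate is bounded by the decay rate of the source. This is one reason such generators are slow.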

The next works are a collaboration between quantum, non-deterministic true randomness and human (semi-)deterministic randomness. This assumes that an artist is a machine that repeats learned patterns and belongs to the predictable realm of Newtonian classical mechanics.

[ The Pure Elephant - a pencil, ink and watercolour hand drawing where quantum-level true random numbers are used in order to determine the colour and width of these "barcode" structures, to inject the "Nature's DNA" ]

[ The New Mongol System ]

[ Perfect Chaos Decay Qnt 21 - a single-stroke stretch art work on a true random bitmap, 3000x2000px.

You may see some patterns in the background, such as large regular transparent squares. These come from scaling the image down to a small screen; they do not appear on a larger screen ]

1997 - I discovered the LavaRand online randomizer service, a.k.a. the "Wall of Entropy" (lavarand.sgi.com). Designed by Silicon Graphics, it used cryptographic hashing of the output of a camera filming a wall of lava lamps. It was based on the 1996 work "Method for seeding a pseudo-random number generator with a cryptographic hash of a digitization of a chaotic system" by Landon Curt Noll, Robert G. Mende, and Sanjeev Sisodiya.

The LavaRand service was later discontinued. Cloudflare, a web infrastructure company whose services include HTTPS encryption, drew inspiration from the Silicon Graphics system and created a similar installation. It generates physical entropy as an extra layer of defense for the cryptographic systems that secure their internet traffic.

[ LavaRand wall. © Cloudflare / Silicon Graphics ]
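The seeding idea named in that title can be sketched as follows: hash a digitization of a chaotic system, then use the digest to seed a pseudo-random generator. Two choices here are assumptions for illustration. One is SHA-256. The other is Python's `random.Random`, a Mersenne Twister that is not cryptographically secure. A real cryptographic system would feed the hash into a cryptographically secure generator instead. `frame` stands for the raw bytes of one camera image, and the function names are hypothetical.

```python
import hashlib
import random


def seed_from_frame(frame: bytes) -> int:
    """Hash a digitized camera frame of a chaotic scene into a 256-bit seed."""
    return int.from_bytes(hashlib.sha256(frame).digest(), "big")


def prng_from_frame(frame: bytes) -> random.Random:
    """A pseudo-random generator seeded from the frame's hash."""
    return random.Random(seed_from_frame(frame))
```

The hash makes even a one-pixel change in the frame produce an unrelated seed. That is the property that turns the slow, chaotic motion of the lamps into usable seed material.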

[ Radio Lava X18 - 2D hyperparameters visualisation of the Roamer stochastic gradient descent over a mix of HotBits and LavaRand data ]

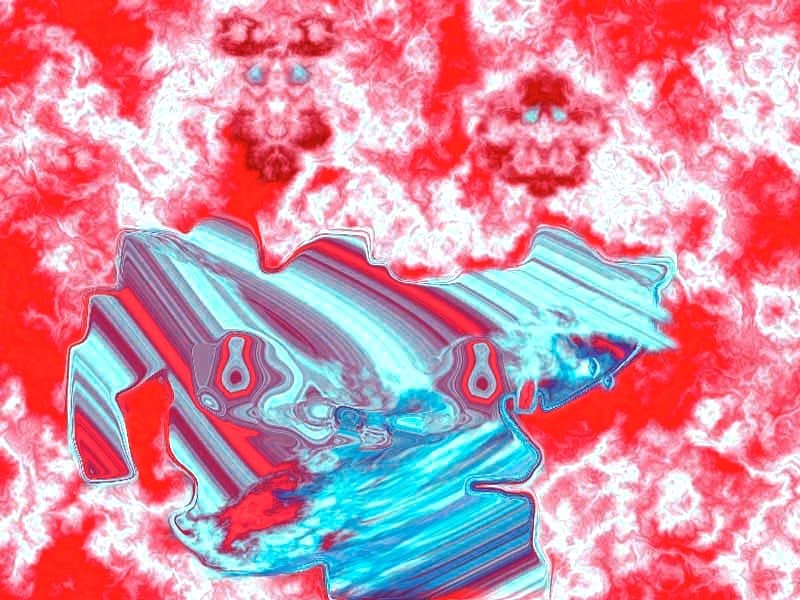

I also liked to play with these outputs, trying to find faces in them. A pareidolia game.

Mirroring half-faces of the half-life:

[ Red Blue Baron ]

[ Red Blue Baron ]

[ Pure Field, Dusk X5 - a sequential linear gradient based on a mix of HotBits and LavaRand data ]

One of the Pure Fields creation algorithms works with three random numbers per field. The first defines the colour, the second sets the width of the colour field, and the third defines the feather (the extent of the gradient between consecutive colours).
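A minimal sketch of one reading of this algorithm follows. Consecutive triples of random numbers in [0, 1) become (hue, width, feather). Each field is painted as a solid run of pixels, and a linear blend of `feather` pixels joins it to the next field. The HSV hue mapping, the pixel scales (`max_width`, `max_feather`) and the name `pure_field` are assumptions, not the parameters of the original works.

```python
import colorsys


def pure_field(numbers, max_width=60, max_feather=30):
    """Turn a stream of numbers in [0, 1) into one row of RGB pixels.
    Each consecutive triple (colour, width, feather) becomes one field;
    leftover numbers that do not form a full triple are ignored."""
    fields = []
    for c, w, f in zip(*[iter(numbers)] * 3):
        rgb = colorsys.hsv_to_rgb(c, 1.0, 1.0)  # 1st number: hue
        width = 1 + int(w * max_width)          # 2nd: field width in pixels
        feather = int(f * max_feather)          # 3rd: gradient length in pixels
        fields.append((rgb, width, feather))

    row = []
    for i, (rgb, width, feather) in enumerate(fields):
        row.extend([rgb] * width)
        if i + 1 < len(fields):
            nxt = fields[i + 1][0]
            for step in range(1, feather + 1):
                t = step / (feather + 1)  # blend factor strictly between 0 and 1
                row.append(tuple(a + (b - a) * t for a, b in zip(rgb, nxt)))
    return row
```

For example, `pure_field([0.0, 0.5, 0.5, 0.5, 0.0, 0.0])` yields 31 red pixels, a 15-pixel blend and then one cyan pixel. Stacking such rows, each fed from a random source, gives a full-width striped field.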

The Pure Fields are somewhat similar to my earlier Random Totems. Instead of ASCII symbols, however, they are made by visualising an array of micro-lines of joined gradients.

They are full-width colour Totems.

They are also a sort of inversion of the von Neumann unbiasing procedure.
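For comparison, here is the von Neumann procedure itself. It reads the biased bit stream in non-overlapping pairs, outputs 0 for "01" and 1 for "10", and discards "00" and "11". If the input bits are independent with a constant bias, the two kept patterns are equally likely, so the output is unbiased. A minimal sketch, with a hypothetical function name:

```python
def von_neumann(bits):
    """Von Neumann unbiasing over non-overlapping pairs:
    01 -> 0, 10 -> 1, while 00 and 11 are discarded.
    A trailing unpaired bit is ignored."""
    out = []
    for a, b in zip(bits[0::2], bits[1::2]):
        if a != b:
            out.append(a)  # the first bit of a mixed pair is the output
    return out
```

The price is throughput. If ones occur with probability p, only a fraction 2p(1 - p) of the pairs is kept, so at most a quarter of the input bits survive.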

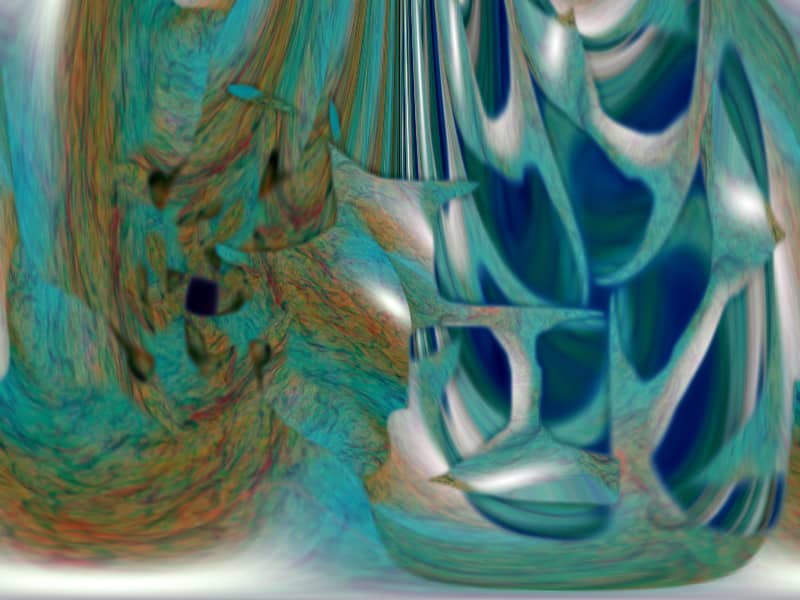

The next works combine various previously developed stochastic gradient descent methods with generative use of the Roamer model over diverse random data arrays, overlaid with digital painting.

I later re-synthesized and replicated this approach in various combinations and ways until 2020.

[ Summer flowers / Radio Lava ]

[ Random forest / Radio Lava ]

[ Little boat / Radio Lava ]

October 1997 - my first solo exhibition, "Demons of Maxwell". It was based on ideas at the crossroads of entropy, irreversibility, information theory and the deterministic nature of Newtonian classical mechanics, and inspired by James Clerk Maxwell's 1867 thought experiment.

Maxwell's work also inspired related ideas: quantum tunnelling, the tunnelling of a classical wave-particle association, and evanescent wave coupling (the application of Maxwell's wave equation to light).

[ The exhibition press release in a computer magazine. Picture undoxed ]

1998 - I also began to use Random.org, an online service providing random numbers derived from atmospheric noise generated by thunderstorms.

I experimented with training my Roamer model to predict this noise. Trying to predict pure randomness was a way to test its purity.

[ Generator structure: Hitachi transistor radio, Sun SPARC station, whiskey bottles. © Random.org, Distributed Systems Group at Trinity College, Dublin ]
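A simple way to test whether a stream is predictable is an online next-bit predictor, sketched below. For each context of the previous `order` bits, it guesses whichever bit has followed that context more often so far, then updates its counts. This is a stand-in for illustration, not the Roamer model. The name `next_bit_accuracy` and the default order are assumptions.

```python
from collections import defaultdict


def next_bit_accuracy(bits, order=3):
    """Fraction of bits correctly guessed by an online frequency predictor.

    For each context (the previous `order` bits), guess the bit seen most
    often after it so far (0 on ties), then record the actual bit.
    On a truly random stream the accuracy should hover around 0.5.
    """
    counts = defaultdict(lambda: [0, 0])  # context -> [zeros seen, ones seen]
    hits = total = 0
    for i in range(order, len(bits)):
        ctx = tuple(bits[i - order : i])
        zeros, ones = counts[ctx]
        guess = 1 if ones > zeros else 0
        hits += guess == bits[i]
        total += 1
        counts[ctx][bits[i]] += 1
    return hits / total if total else 0.0
```

Any structure the predictor can exploit pushes the accuracy clearly above 0.5. A periodic stream, for instance, is predicted almost perfectly after a few steps.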

Digital thunderstorms derived from natural ones.

Making art with no idea or intention, ultimately diminishing the artist's participation in the process.

The real WuWei: non-doing, non-authorship.

The art made by the nature.

Some of the following works were shown at my 1998 exhibition "WuWei or The Dog that walks on its own".

[ Sunflower / WuWei - stochastic process over the atmospheric random data ]

[ Dog / WuWei - atmospheric random data, stretch art and wave art ]

[ Inner Tree / WuWei - atmospheric random data ]

[ Random Petrel / WuWei - thunderstorms random data ]

[ Not a Phoenix / WuWei ]